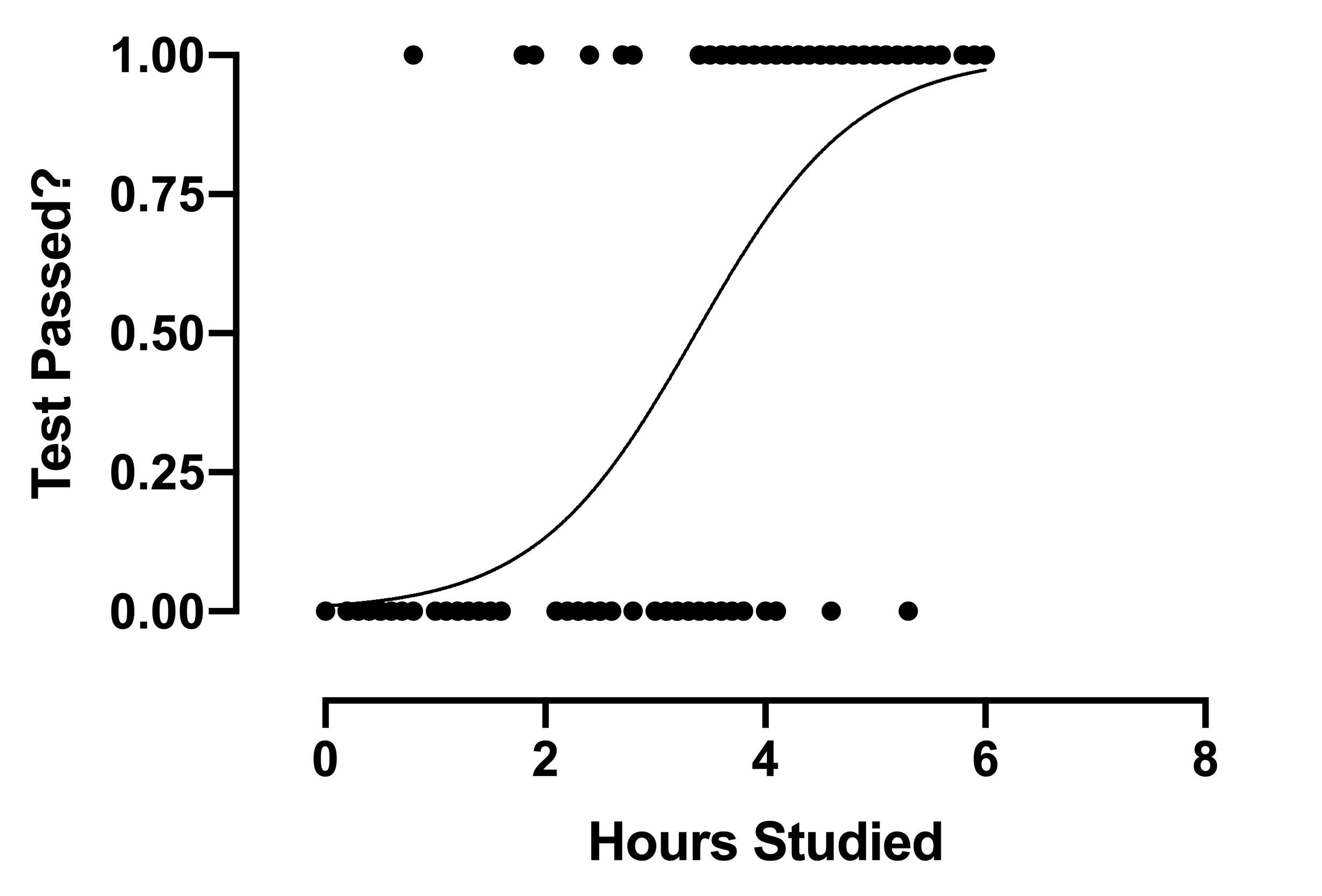

Linear regression works by fitting a model that you can use to predict the expected (average) value of Y for a given value of X. This model describes the relationship between the two variables and answers the question: how much does Y change, on average, when X changes? In other words, with a linear regression model that fits the data well, you could predict the value of an outcome reasonably well just by knowing the value of the predictor. In contrast, logistic regression models the probability of observing a success, given the value of the predictor. Take the data shown below as an example:

In this plot, every data point takes either the value 0 (fail) or 1 (pass). The logistic fit is the S-curve that models the probability of passing as a function of hours of study. In this example, instructors will be glad to see that most students who studied 4 hours passed the exam. Indeed, for a student who studied 4 hours, the model predicts a probability of passing of about 70%.
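The S-curve comes from the logistic function, which turns a linear score `b0 + b1 * hours` into a probability. Here is a minimal Python sketch of that mapping. The coefficients `B0` and `B1` are hypothetical values chosen for illustration; they are not the coefficients fitted to the exam data in the plot.

```python
import math

# Hypothetical coefficients chosen for illustration; NOT fitted to the plotted exam data.
B0 = -4.0  # intercept on the log-odds scale
B1 = 1.2   # change in the log-odds of passing per extra hour of study


def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + exp(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def pass_probability(hours: float, b0: float = B0, b1: float = B1) -> float:
    """P(pass | hours) = sigmoid(b0 + b1 * hours); strictly between 0 and 1 in exact arithmetic."""
    return sigmoid(b0 + b1 * hours)


for h in [0, 2, 4, 6, 8]:
    print(f"{h} hours of study -> P(pass) = {pass_probability(h):.3f}")
```

Whatever values the coefficients take, the output stays between 0 and 1, which is what gives the curve its flat lower and upper tails.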

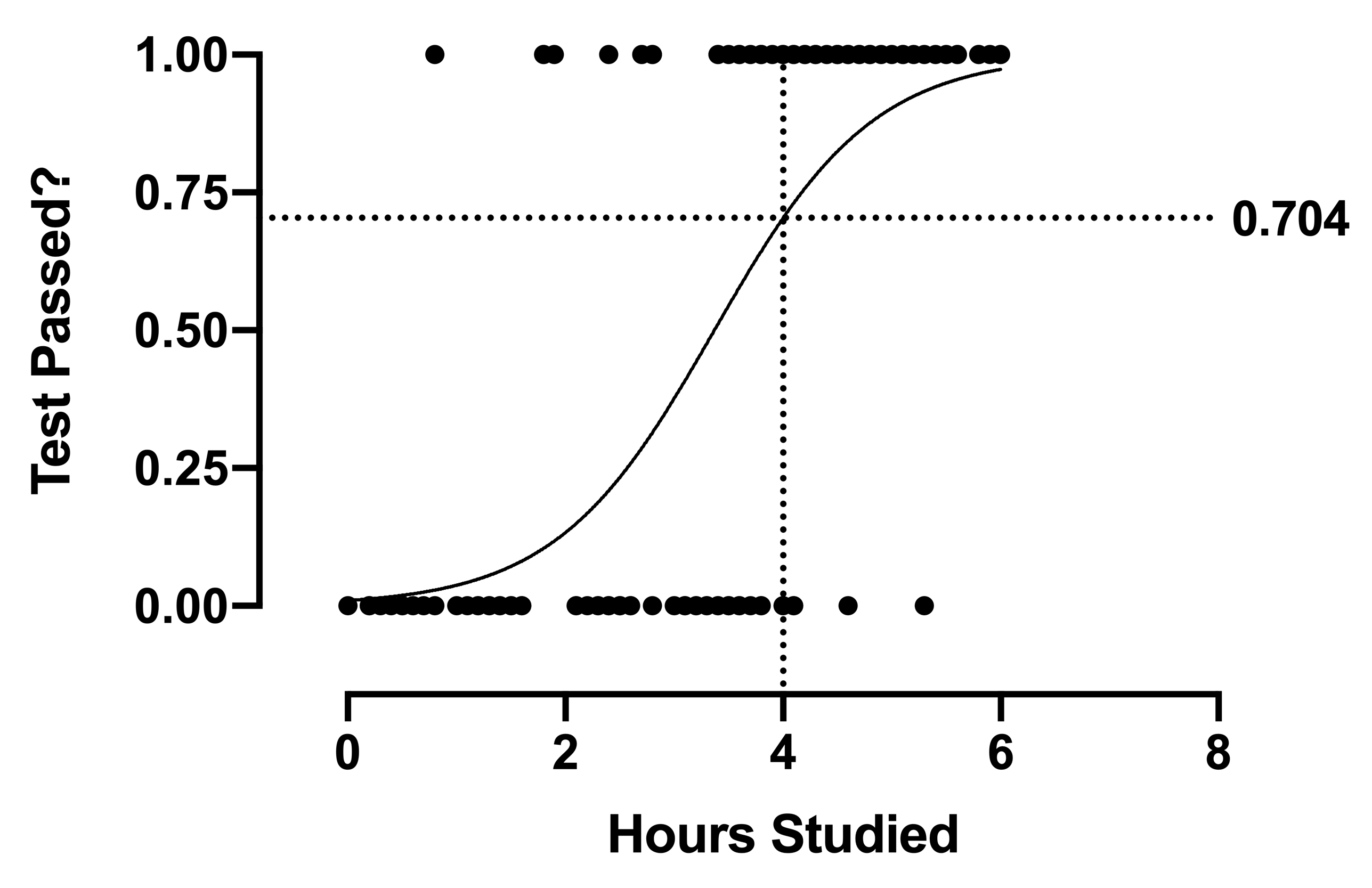

The S-curve is a consequence of the way the logistic function maps the predictor to a probability. Probabilities are bounded between 0 and 1, which makes sense: you can’t have a “negative probability” of an event happening, and a probability greater than 100% doesn’t make sense either. The S-curve therefore approaches 0 at its lower end and 1 at its upper end but never crosses either bound. What this means, however, is that, unlike with linear regression, the values we get from the model are not direct predictions of the values we will observe. At X = 4, the value of the model is 0.704. However, any observation we make at X = 4 will be ONLY 0 or 1; the observed value will never be 0.704. The model simply tells us to expect ~70% of the outcomes at X = 4 to be 1. This is a critical point to understand about logistic regression.
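To make this concrete, the sketch below simulates exam outcomes for many students who each studied 4 hours, assuming the model's value of 0.704 is exactly the true probability of passing. Each simulated outcome is 0 or 1, yet the share of 1s settles near 70% as the number of students grows. The seed and sample sizes are arbitrary choices for illustration.

```python
import random

P_PASS_AT_4_HOURS = 0.704  # model value at X = 4, taken from the text


def simulate_outcomes(p: float, n: int, seed: int = 0) -> list[int]:
    """Draw n independent Bernoulli(p) outcomes: 1 = pass, 0 = fail."""
    rng = random.Random(seed)
    return [1 if rng.random() < p else 0 for _ in range(n)]


for n in [10, 100, 10_000]:
    outcomes = simulate_outcomes(P_PASS_AT_4_HOURS, n)
    share = sum(outcomes) / n
    # Individual outcomes are only ever 0 or 1; only their average approaches 0.704.
    print(f"n={n:>6}: observed values={sorted(set(outcomes))}, share passing={share:.3f}")
```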

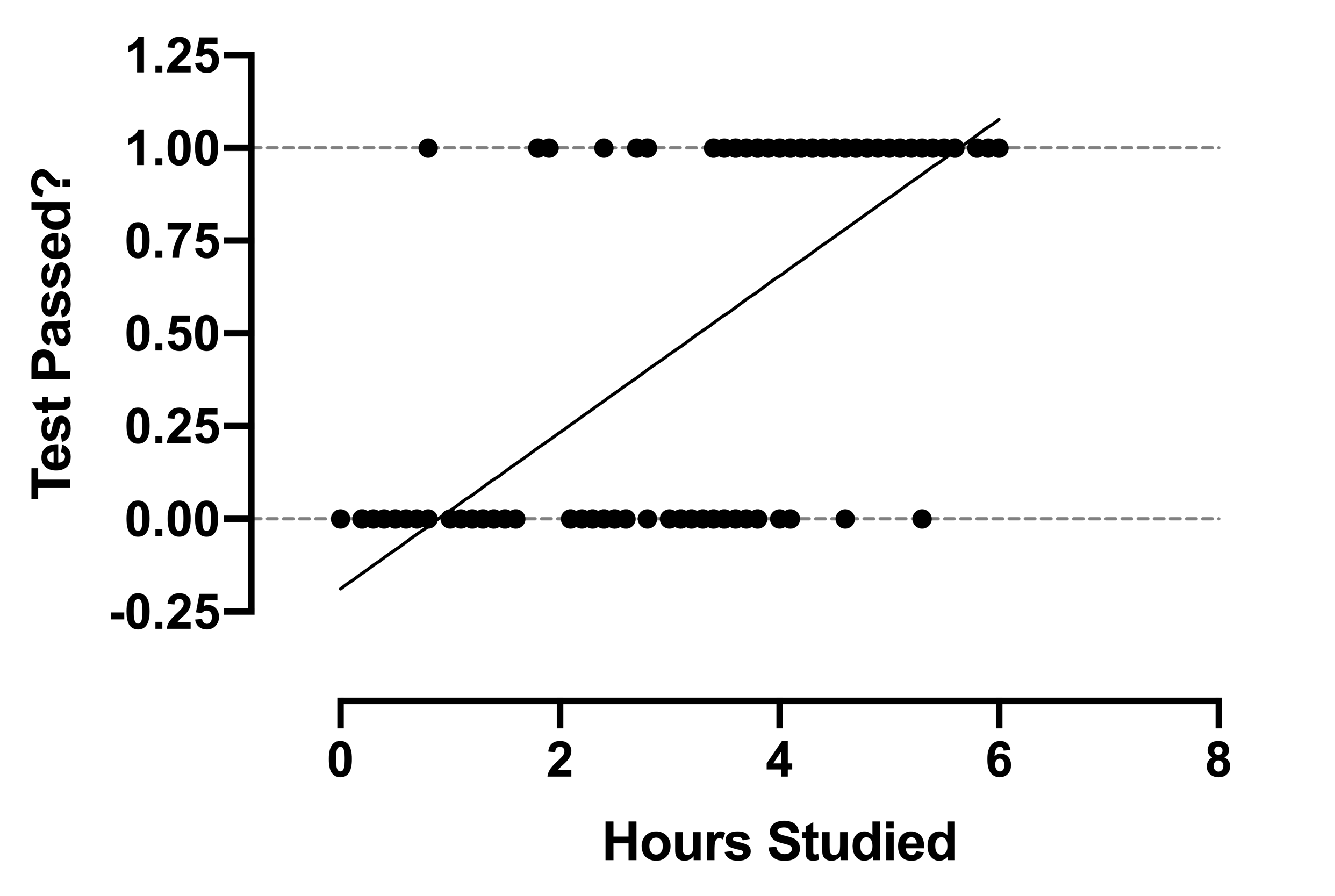

If we compare the logistic regression model and the linear regression model on the same data, we quickly see why simple linear regression doesn’t work for this kind of data.

Our data are still 0s and 1s, but, unlike the logistic model, the linear model does not restrict its predictions to valid probabilities. Instead, it predicts values that can be less than 0 or greater than 1. For example, this model gives students who studied less than about 0.9 hours a negative estimated value of passing the test. In some cases, linear models can be used on binary dependent (outcome) variables to do simple classification. However, their predictions are not valid probabilities, and their standard errors, tests of significance, and confidence intervals rely on assumptions, such as constant error variance, that a binary outcome violates. For interpretable coefficients, tests of significance, and confidence intervals when your outcome is binary, use logistic regression.
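The same contrast can be reproduced on a small hypothetical dataset (the hours and pass/fail labels below are invented for illustration and are not the data behind the plots). The sketch fits an ordinary least-squares line and a maximum-likelihood logistic regression, the latter by Newton-Raphson, using only the standard library. It then compares predictions: the line dips below 0 for little study and exceeds 1 for a lot of study, while the logistic model stays between 0 and 1. In practice you would normally use a statistics library rather than hand-written fitting code.

```python
import math

# Hypothetical data: hours studied and exam outcome (1 = pass, 0 = fail).
HOURS = [0.5, 1.0, 1.0, 1.5, 2.0, 2.0, 2.5, 3.0, 3.0, 3.5, 4.0, 4.0, 4.5, 5.0, 5.5, 6.0]
PASSED = [0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 1, 1]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_linear(x: list[float], y: list[int]) -> tuple[float, float]:
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    b = sxy / sxx
    return y_mean - b * x_mean, b


def fit_logistic(x: list[float], y: list[int], max_iter: int = 100, tol: float = 1e-10) -> tuple[float, float]:
    """Maximum-likelihood fit of P(y = 1) = sigmoid(a + b * x) by Newton-Raphson; returns (a, b)."""
    a, b = 0.0, 0.0
    for _ in range(max_iter):
        p = [sigmoid(a + b * xi) for xi in x]
        # Gradient of the log-likelihood with respect to (a, b).
        g0 = sum(yi - pi for yi, pi in zip(y, p))
        g1 = sum((yi - pi) * xi for xi, yi, pi in zip(x, y, p))
        # Information matrix [[h00, h01], [h01, h11]] with weights p * (1 - p).
        w = [pi * (1.0 - pi) for pi in p]
        h00 = sum(w)
        h01 = sum(wi * xi for wi, xi in zip(w, x))
        h11 = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system (information) * step = gradient.
        step_a = (h11 * g0 - h01 * g1) / det
        step_b = (h00 * g1 - h01 * g0) / det
        a, b = a + step_a, b + step_b
        if max(abs(step_a), abs(step_b)) < tol:
            break
    return a, b


lin_a, lin_b = fit_linear(HOURS, PASSED)
log_a, log_b = fit_logistic(HOURS, PASSED)
for h in [0.0, 2.0, 4.0, 8.0]:
    print(f"{h:>4} h: linear = {lin_a + lin_b * h:+.3f}, logistic = {sigmoid(log_a + log_b * h):.3f}")
```

On this made-up data the fitted line predicts a negative value at 0 hours and a value above 1 at 8 hours, which is the same failure the comparison plot shows.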