Now that we know how logistic regression uses log odds to relate probabilities to the coefficients, we can think about what these coefficients are actually telling us. For simple logistic regression (like simple linear regression), there are two coefficients: an “intercept” (β0) and a “slope” (β1). Although you’ll often see these coefficients referred to as intercept and slope, it’s important to remember that they don’t provide a graphical relationship between X and P(Y=1) in the way that their counterparts do for X and Y in simple linear regression. But what do they tell us?

• β0: the log odds when X is 0

• β1: how much the log odds change when X increases by 1.0 (a decrease of 1.0 in X changes the log odds by -β1)

Let’s consider a practical example. Suppose our simple logistic regression model is Ln(odds) = -5.5 + 1.2*X. Here, β0 = -5.5 and β1 = 1.2. This means that when X = 0, the log odds equal -5.5. It also tells us that for every 1 unit increase in X, the log odds increase by 1.2 (a 2 unit increase in X increases the log odds by 2.4, etc.).
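As a minimal sketch of the example above, the short Python snippet below evaluates the log odds at a few values of X. The function name `log_odds` and its default arguments are illustrative choices, not part of Prism.

```python
def log_odds(x, b0=-5.5, b1=1.2):
    """Log odds predicted by the example model Ln(odds) = -5.5 + 1.2*X."""
    return b0 + b1 * x

for x in (0, 1, 2):
    # The change in log odds relative to X = 0 is b1 times the change in X.
    print(x, log_odds(x), log_odds(x) - log_odds(0))
```

The last column shows the additive effect of the slope: each 1 unit step in X adds β1 to the log odds.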

Thinking about log odds can be confusing, though. So using the math described above, we can re-write the simple logistic regression model to tell us about the odds (or even about probability).

$$\text{Odds} = e^{\beta_0 + \beta_1 X}$$

Using some rules for exponents, we can obtain:

$$\text{Odds} = \left(e^{\beta_0}\right)\left(e^{\beta_1 X}\right)$$

When X equals 0, the second term equals 1.0. Therefore, $e^{\beta_0}$ is the odds when X is zero. In our example above, when X is zero, the odds are $e^{-5.5}$, or about 0.004. Additionally, you can see that a 1 unit increase in X results in multiplying the odds by $e^{\beta_1}$. Thus, if X is 1, the odds are $e^{-5.5} \cdot e^{1.2} = e^{-4.3}$, or about 0.014. These values ($e^{\beta_0}$ and $e^{\beta_1}$) are called “odds ratios” and are reported by Prism for simple logistic regression. Note that for the sake of clarity, Prism simply reports the odds ratios as “β0” and “β1”, but numerically, these are actually $e^{\beta_0}$ and $e^{\beta_1}$, respectively.
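The multiplicative behavior of the odds can be checked numerically. The sketch below uses the example coefficients (β0 = -5.5, β1 = 1.2) and only Python's standard `math` module. The names `odds` and `odds_multiplier` are illustrative assumptions, not Prism output labels.

```python
import math

B0, B1 = -5.5, 1.2  # coefficients from the example model

def odds(x, b0=B0, b1=B1):
    """Odds that Y = 1 at a given X: e^(b0 + b1*x)."""
    return math.exp(b0 + b1 * x)

# Factor applied to the odds for each 1 unit increase in X.
odds_multiplier = math.exp(B1)

print(f"odds at X=0: {odds(0):.4f}")
print(f"odds at X=1: {odds(1):.4f}")
print(f"multiplier per unit X: {odds_multiplier:.3f}")
print(f"odds(1) / odds(0):     {odds(1) / odds(0):.3f}")
```

The last two printed values agree, which is the sense in which a 1 unit increase in X multiplies the odds by a fixed factor, regardless of the starting value of X.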

The form of the equation relating these coefficients to the probability that Y=1 is shown below. However, the interpretation of these coefficients in this equation is even more challenging than for odds, and so is not included here.

$$P(Y=1) = \frac{e^{\beta_0 + \beta_1 X}}{1 + e^{\beta_0 + \beta_1 X}}$$
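Although the coefficients are harder to interpret on the probability scale, the probability itself is easy to compute from the odds. The following sketch reuses the earlier example coefficients (β0 = -5.5, β1 = 1.2) as default arguments. The function name `probability` is a hypothetical helper, not a Prism function.

```python
import math

def probability(x, b0=-5.5, b1=1.2):
    """P(Y=1) at X, computed as odds / (1 + odds)."""
    odds = math.exp(b0 + b1 * x)
    return odds / (1.0 + odds)

for x in (0, 2, 4, 6, 8):
    print(x, round(probability(x), 4))
```

One property that does carry over cleanly: the probability equals 0.5 exactly where the log odds are zero, that is, at X = -β0/β1 (about 4.58 for these coefficients).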

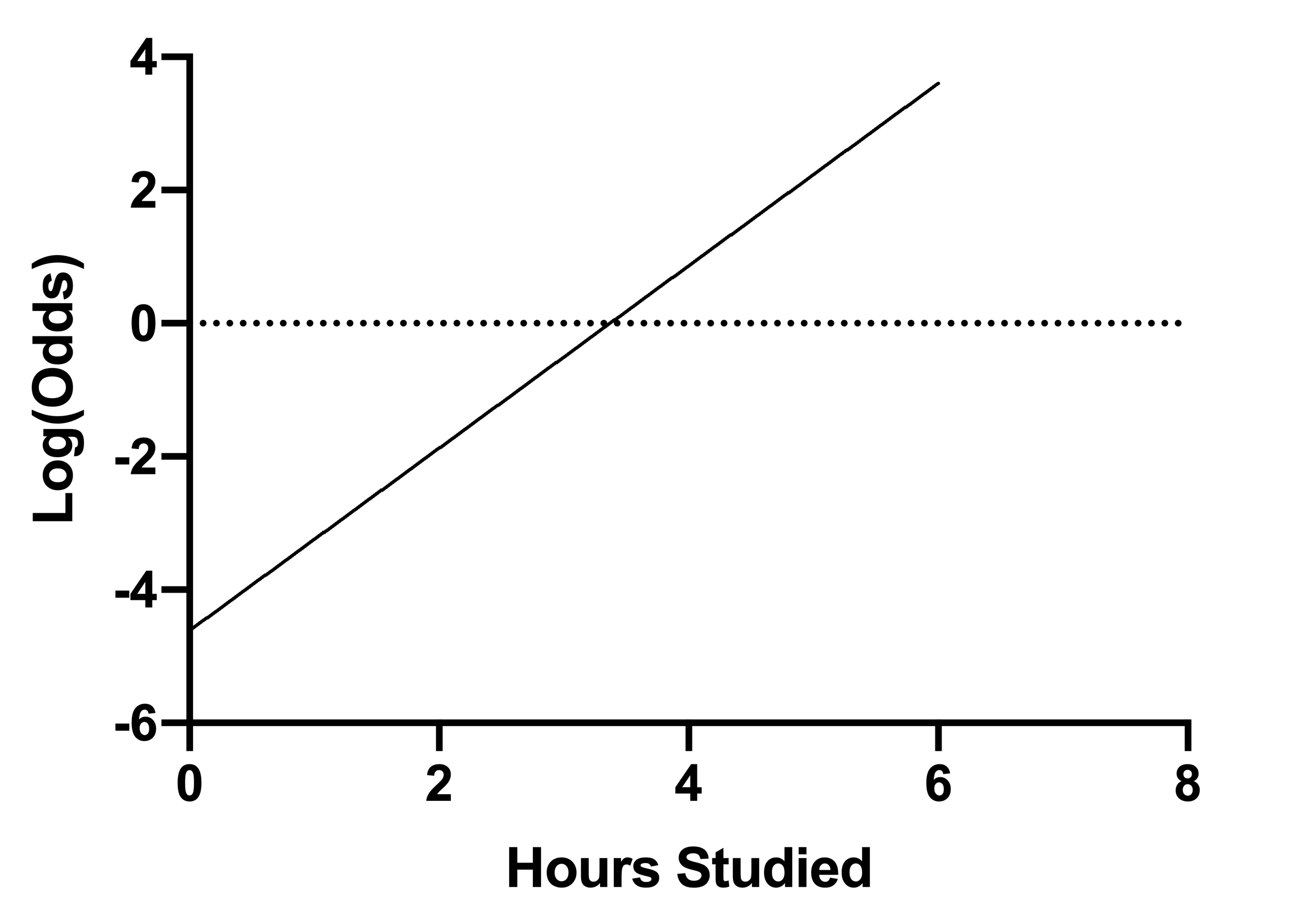

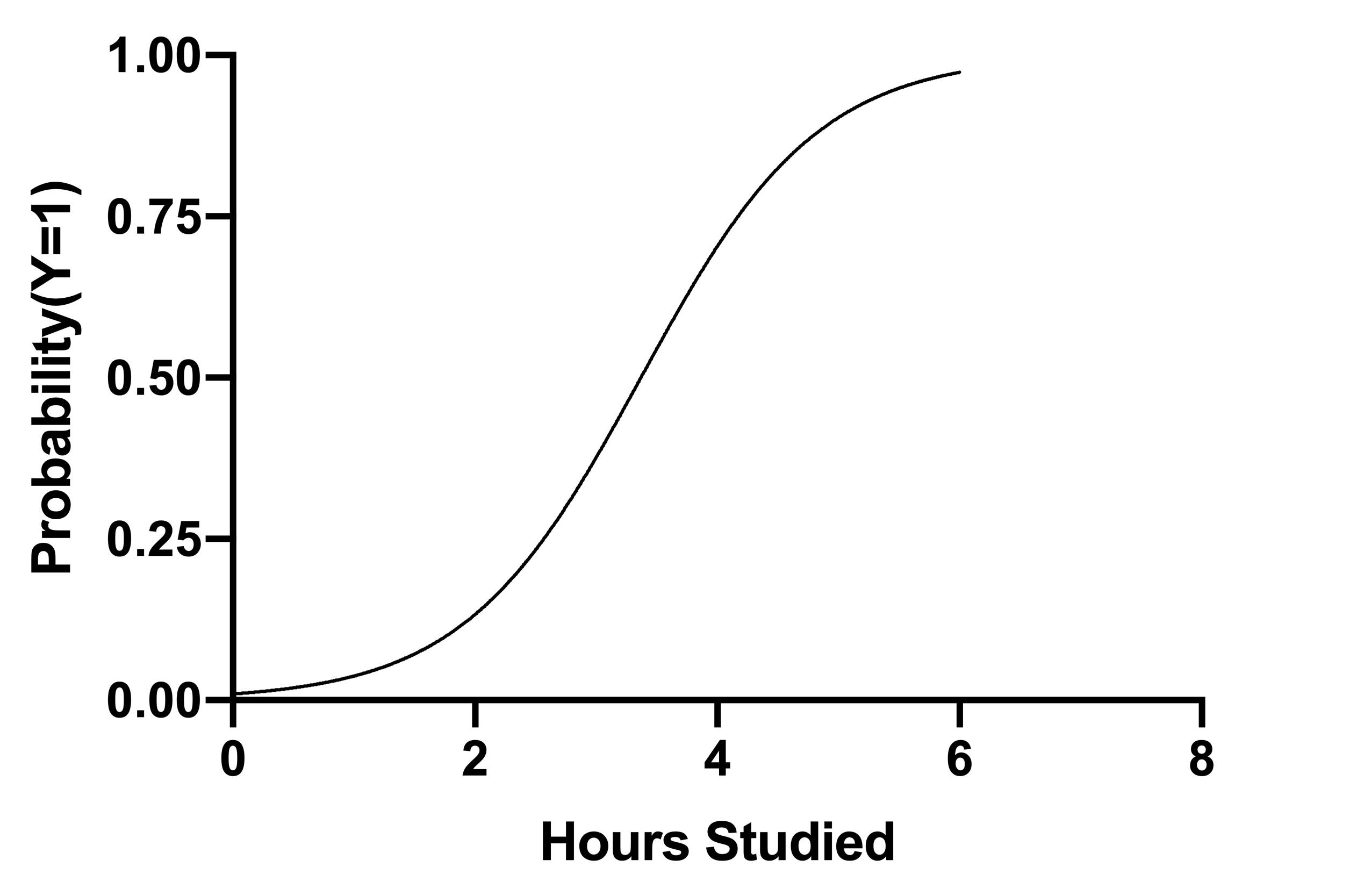

One final point is worth considering regarding these transformations. While the usual graphical representation of simple logistic regression is the S-shaped logistic curve of probability vs. X, the math shown above also makes it possible to plot the log odds vs. X. A graph of the log odds vs. X is a straight line with (you guessed it) an intercept equal to β0 and a slope equal to β1. This is demonstrated graphically below:

Figure: two graphs of the same model (β0 = -4.614, β1 = 1.370), plotted as [Probability Y = 1] vs. X and as Log(Odds) vs. X.
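The straight-line relationship can also be verified numerically rather than graphically. The sketch below takes the parameter values given for the graphs (β0 = -4.614, β1 = 1.370), computes the probability at several X values, converts each probability back to log odds, and checks the spacing between consecutive points. The X grid and the helper names are assumptions chosen for illustration.

```python
import math

B0, B1 = -4.614, 1.370  # parameter values given for the graphs

def probability(x):
    """Logistic curve: equivalent to e^z / (1 + e^z) with z = B0 + B1*x."""
    return 1.0 / (1.0 + math.exp(-(B0 + B1 * x)))

def logit(p):
    """Log odds of a probability p."""
    return math.log(p / (1.0 - p))

xs = [0, 1, 2, 3, 4, 5, 6]
log_odds = [logit(probability(x)) for x in xs]

# Differences between consecutive log odds values (X spaced 1 apart).
steps = [b - a for a, b in zip(log_odds, log_odds[1:])]

print("log odds at X=0:", round(log_odds[0], 3))
print("step per unit X:", [round(s, 3) for s in steps])
```

Up to floating-point rounding, the log odds at X = 0 match β0 and every step matches β1, which is what a straight line with that intercept and slope requires.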