Intertwined parameters

When your model has two or more parameters, as is almost always the case, the parameters can be intertwined.

What does it mean for parameters to be intertwined? After fitting a model, change the value of one parameter but leave the others alone. This will move the curve away from the points. Now change the other parameter(s) in an attempt to move the curve closer to the data points. If you can bring the curve closer to the points, the parameters are intertwined. If you can bring the curve all the way back to its original position, the parameters are completely redundant.
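The thought experiment above can be sketched numerically. This is a minimal illustration using NumPy, not Prism's code. It assumes made-up data from a straight line y = b + m*x sampled far from x = 0, where slope and intercept are intertwined, and a hypothetical model y = (a*c)*x in which a and c are completely redundant because only their product matters.

```python
import numpy as np

# Made-up data: a noisy straight line sampled far from x = 0.
rng = np.random.default_rng(0)
x = np.linspace(10.0, 12.0, 20)
y = 3.0 + 0.5 * x + rng.normal(scale=0.1, size=x.size)

def ss(curve):
    """Sum of squared vertical distances between the data and a curve."""
    return float(np.sum((y - curve) ** 2))

# Intertwined case: y = b + m*x.
m_best, b_best = np.polyfit(x, y, 1)           # best fit of both parameters
ss_best = ss(b_best + m_best * x)
m_new = m_best + 0.05                           # change the slope only...
ss_moved = ss(b_best + m_new * x)               # ...and the curve moves away
b_refit = float(np.mean(y - m_new * x))         # best intercept for the new slope
ss_refit = ss(b_refit + m_new * x)
print(f"line: best {ss_best:.4f}, slope changed {ss_moved:.4f}, "
      f"intercept refit {ss_refit:.4f}")

# Redundant case (hypothetical model): y = (a*c)*x, only a*c matters.
p_best = float(np.sum(x * y) / np.sum(x * x))  # least-squares slope through origin
a, c = 2.0, p_best / 2.0
ss_red_best = ss(a * c * x)
a_new = 2.5                                     # change a only...
c_refit = p_best / a_new                        # ...then refit c
ss_red_refit = ss(a_new * c_refit * x)
print(f"redundant: best {ss_red_best:.4f}, after refit {ss_red_refit:.4f}")
```

For the line, refitting the intercept recovers most, but not all, of the lost fit (ss_best < ss_refit < ss_moved), so the two parameters are intertwined. For the redundant model, refitting c restores exactly the same curve and the same sum-of-squares.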

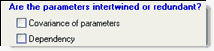

Prism can quantify the relationships between parameters in two ways: dependency and the covariance matrix. If you are in doubt, we suggest that you focus on the dependency values and not bother with the covariance matrix.

Dependency

What is dependency and how do I ask Prism to compute it?

Dependency is reported for each parameter and quantifies the degree to which that parameter is intertwined with the others. To view the dependency of each parameter, check the corresponding option on the Diagnostics tab of the nonlinear regression dialog.

Interpreting dependency

The value of dependency always ranges from 0.0 to 1.0.

A dependency of 0.0 is the ideal case, in which the parameters are entirely independent (mathematicians would say orthogonal). In this case, the increase in sum-of-squares caused by changing the value of one parameter cannot be reduced at all by also changing the values of the other parameters. This is very rare.

A dependency of 1.0 means the parameters are redundant. After changing the value of one parameter, you can change the values of the other parameters to reconstruct exactly the same curve. If any dependency is greater than 0.9999, Prism labels the fit 'ambiguous'.
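The two extremes described above suggest one way to put a number on dependency: change one parameter, measure how much the sum-of-squares rises with the other parameters held fixed, then measure how much of that rise remains after the others are refit. The sketch below assumes a model that is linear in its parameters (or a nonlinear model linearized at the best fit), described by its Jacobian J, and computes 1 - (rise after refitting)/(rise with others fixed). It is our illustration of the definition, not necessarily how Prism implements it. It also computes the equivalent closed form 1 - 1/(A_ii * inv(A)_ii) with A = J^T J; the function names are hypothetical.

```python
import numpy as np

def dependency(J, i, delta=1e-3):
    """Dependency of parameter i, given the Jacobian J (rows = data points,
    columns = parameters) of a linear or linearized model at the best fit."""
    # Change parameter i by delta and hold the others fixed: at the best fit of
    # a linear model, the sum-of-squares rises by the squared length of the shift.
    shift = delta * J[:, i]
    rise_fixed = float(shift @ shift)
    # Refit the other parameters: they absorb the part of the shift that lies
    # in the span of their own columns (a least-squares projection).
    others = np.delete(J, i, axis=1)
    coef, *_ = np.linalg.lstsq(others, shift, rcond=None)
    leftover = shift - others @ coef
    rise_refit = float(leftover @ leftover)
    return 1.0 - rise_refit / rise_fixed

def dependency_closed_form(J, i):
    """Same quantity via 1 - 1/(A_ii * inv(A)_ii), where A = J^T J."""
    A = J.T @ J
    return 1.0 - 1.0 / (A[i, i] * np.linalg.inv(A)[i, i])

# Straight line y = b + m*x: the Jacobian columns are d/db = 1 and d/dm = x.
for label, x in [("x from 10 to 12", np.linspace(10, 12, 20)),
                 ("x from -1 to 1", np.linspace(-1, 1, 21))]:
    J = np.column_stack([np.ones_like(x), x])
    print(label, [round(dependency(J, i), 4) for i in range(2)])
```

With X values far from zero, both line parameters have a dependency above 0.99. With X values symmetric about zero, the two Jacobian columns are orthogonal and the dependency is essentially 0.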

With experimental data, of course, the value will almost always lie between these extremes. Clearly a low dependency value is better. But how high is too high? Any rule of thumb is arbitrary, but dependency values up to 0.90 and even 0.95 are not uncommon, and are not really a sign that anything is wrong.

A dependency greater than 0.99 is really high, and suggests that something is wrong. This means that you can create essentially the same curve, over the range of X values for which you collected data, with multiple sets of parameter values. Your data simply do not define all the parameters in your model. If your dependency is really high, ask yourself these questions:

•Can you fit to a simpler model?

•Would it help to collect data over a wider range of X, or at more closely spaced X values? The answer depends on what the parameters mean.

•Can you collect data from two kinds of experiments, and fit the two data sets together using global fitting?

•Can you constrain one of the parameters to have a constant value based on results from another experiment?
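To see how a wider range of X values can help, here is a sketch that computes dependencies for a hypothetical one-phase exponential decay, Y = Y0*exp(-k*X) + Plateau, at assumed parameter values (Y0 = 10, k = 1, Plateau = 2) and two assumed designs: 15 X values from 0 to 0.5, and 15 X values from 0 to 5. It uses the formula 1 - 1/(A_ii * inv(A)_ii) with A = J^T J, which we assume here as a working definition of dependency; it is not taken from Prism, and the function names are hypothetical.

```python
import numpy as np

def dependencies(J):
    """Dependency of every parameter from the Jacobian J (rows = data points,
    columns = parameters), using 1 - 1/(A_ii * inv(A)_ii) with A = J^T J."""
    A = J.T @ J
    return 1.0 - 1.0 / (np.diag(A) * np.diag(np.linalg.inv(A)))

def decay_jacobian(x, y0, k, plateau):
    """Jacobian of the hypothetical model Y = y0*exp(-k*x) + plateau."""
    e = np.exp(-k * x)
    return np.column_stack([e,                 # dY/dy0
                            -y0 * x * e,       # dY/dk
                            np.ones_like(x)])  # dY/dplateau

params = dict(y0=10.0, k=1.0, plateau=2.0)     # assumed parameter values
narrow = dependencies(decay_jacobian(np.linspace(0, 0.5, 15), **params))
wide = dependencies(decay_jacobian(np.linspace(0, 5, 15), **params))
print("X from 0 to 0.5:", np.round(narrow, 4))
print("X from 0 to 5:  ", np.round(wide, 4))
```

Over the short range, the decay looks almost like a straight line, so all three parameters have dependencies near 1. Over the longer range, every dependency is lower.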

If the dependency is high, and you are not sure why, look at the covariance matrix (see below). While the dependency is a single value for each parameter, the covariance matrix reports the normalized covariance for each pair of parameters. If the dependency is high, then the covariance with at least one other parameter will also be high. Identifying which parameter that is may help you decide where to collect more data, or how to set a constraint.
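Normalizing a covariance matrix divides each entry by the product of the two parameters' standard errors, giving values between -1 and 1. The sketch below does that and then lists, for each parameter, the partner with the largest absolute normalized covariance, which is the pair to examine when a dependency is high. The covariance matrix here is assumed: it is built from the Jacobian of a hypothetical exponential decay, Y = Y0*exp(-k*X) + Plateau, over X = 0 to 0.5, with an assumed residual variance of 0.01. In practice you would start from the covariance matrix your fitting software reports. The function names are hypothetical.

```python
import numpy as np

def normalized_covariance(cov):
    """Return cov_ij / sqrt(cov_ii * cov_jj): ones on the diagonal and
    off-diagonal values between -1 and 1."""
    se = np.sqrt(np.diag(cov))          # standard error of each parameter
    return cov / np.outer(se, se)

def strongest_partner(ncov, names):
    """For each parameter, the other parameter with the largest absolute
    normalized covariance, and that value."""
    result = {}
    for i, name in enumerate(names):
        row = np.abs(ncov[i])           # a new array, so ncov is untouched
        row[i] = -np.inf                # skip the parameter itself
        j = int(np.argmax(row))
        result[name] = (names[j], round(float(ncov[i, j]), 4))
    return result

# Assumed covariance matrix: s^2 * inv(J^T J) for Y = y0*exp(-k*x) + plateau
# at y0 = 10, k = 1, with 15 X values from 0 to 0.5 and s^2 = 0.01.
x = np.linspace(0, 0.5, 15)
e = np.exp(-x)
J = np.column_stack([e, -10.0 * x * e, np.ones_like(x)])
cov = 0.01 * np.linalg.inv(J.T @ J)

ncov = normalized_covariance(cov)
names = ["y0", "k", "plateau"]
print(np.round(ncov, 3))
print(strongest_partner(ncov, names))
```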

How dependency is calculated

This example will help you understand how Prism computes dependency.