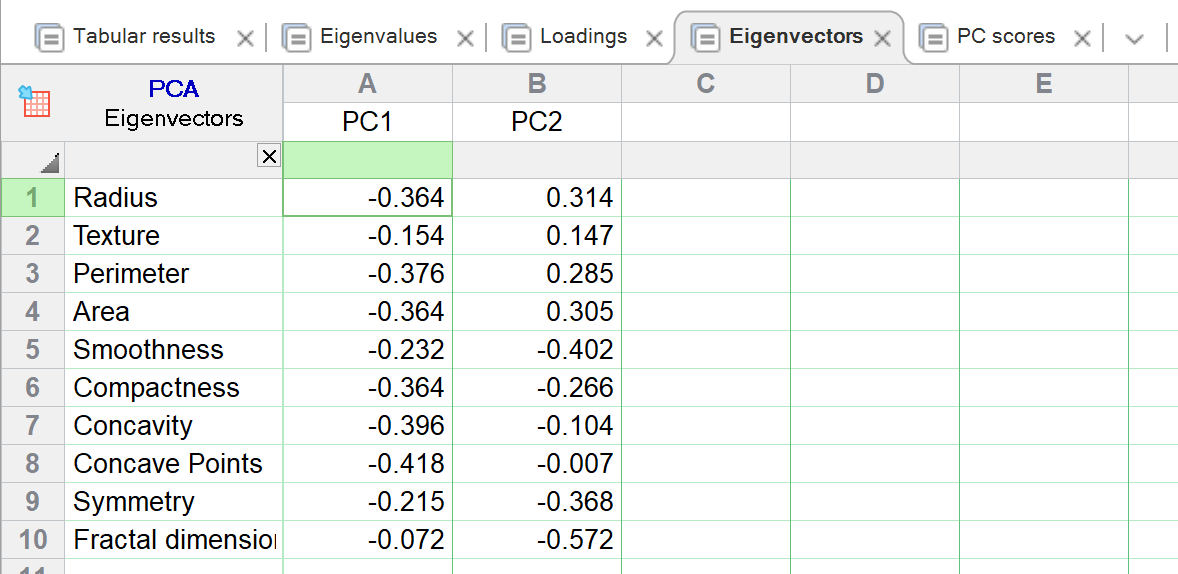

Eigenvectors, also called principal component vectors, define each principal component as a specific linear combination of the original variables.

In the example below:

• PC1 is defined as: -0.364 * Radius - 0.154 * Texture - 0.376 * Perimeter and so on

• PC2 is defined as: 0.314 * Radius + 0.147 * Texture + 0.285 * Perimeter and so on
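As a rough illustration of what "linear combination" means here, the sketch below evaluates only the three PC1 terms listed above for a single observation. The input values are invented, and treating them as standardized values is an assumption; a full PC1 score would also include the terms for the remaining variables.

```python
# Coefficients for PC1 as listed above (first three variables only)
pc1_coefficients = {"Radius": -0.364, "Texture": -0.154, "Perimeter": -0.376}

# Hypothetical standardized values for one observation (made up for illustration)
observation = {"Radius": 1.0, "Texture": -0.5, "Perimeter": 2.0}

# Partial PC1 score: sum of coefficient * value over the listed variables
partial_pc1 = sum(
    pc1_coefficients[name] * observation[name] for name in pc1_coefficients
)
print(round(partial_pc1, 3))
```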

Eigenvectors are the backbone of principal component analysis (and many other multivariate analysis methods), because they define the directions that explain the greatest variance in the input data. Each column of values in the table below represents a single eigenvector. Eigenvectors are only shown for PCs that were selected by the chosen selection method.

Note the distinction between eigenvalues and eigenvectors. The eigenvalue for each principal component is represented by a single value (a single number), and quantifies how much of the overall variation that component explains. In contrast, the eigenvector for each principal component has one value (one number) per original variable. These numbers (as explained above) represent the coefficients in the linear combination of variables used to define the principal component.
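The difference in shape between the two can be seen in a minimal sketch with NumPy. The data here are synthetic, and running the decomposition on the correlation matrix (that is, on standardized variables) is an assumption made for illustration, not a description of how any particular software computes its results.

```python
import numpy as np

# Synthetic data: 50 observations of 3 variables (hypothetical values)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
X[:, 2] = X[:, 0] + 0.1 * rng.normal(size=50)  # make variable 3 track variable 1

# Correlation matrix of the variables (columns)
R = np.corrcoef(X, rowvar=False)

# eigh is for symmetric matrices and returns eigenvalues in ascending order
eigenvalues, eigenvectors = np.linalg.eigh(R)

# Reorder so that PC1 has the largest eigenvalue
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# One number per PC: its share of the total variance
proportion_of_variance = eigenvalues / eigenvalues.sum()

print(eigenvalues.shape)   # one eigenvalue per principal component
print(eigenvectors.shape)  # one column per PC, one row per original variable
```

Each column of `eigenvectors` holds the coefficients for one principal component, while each entry of `eigenvalues` is the single number attached to that component.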

How are loadings, eigenvectors, and eigenvalues related?

Like this: Loadings = Eigenvector * sqrt(Eigenvalue)
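A small numerical sketch of this relationship, assuming a hypothetical 2 x 2 correlation matrix and using NumPy for the decomposition:

```python
import numpy as np

# Hypothetical correlation matrix for two variables (illustrative values)
R = np.array([[1.0, 0.8],
              [0.8, 1.0]])

# Eigen decomposition of the symmetric matrix, reordered largest-first
eigenvalues, eigenvectors = np.linalg.eigh(R)
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Loadings = Eigenvector * sqrt(Eigenvalue), applied column by column:
# broadcasting scales column j of eigenvectors by sqrt(eigenvalues[j])
loadings = eigenvectors * np.sqrt(eigenvalues)

print(eigenvalues)
print(loadings)
```

The sign of each eigenvector column is arbitrary, so the signs of the loadings may differ between programs. In this sketch, the squared loadings in each column sum to that component's eigenvalue, which is one way to check the scaling.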