Two primary methods are used to achieve dimensionality reduction: feature selection and feature extraction. Don’t worry too much about the term “feature” here. In machine learning (where PCA is primarily employed), the term “feature” simply means a measurable property, and is often used interchangeably with the term “predictor”. In Prism, you will also see these being referred to simply as variables.

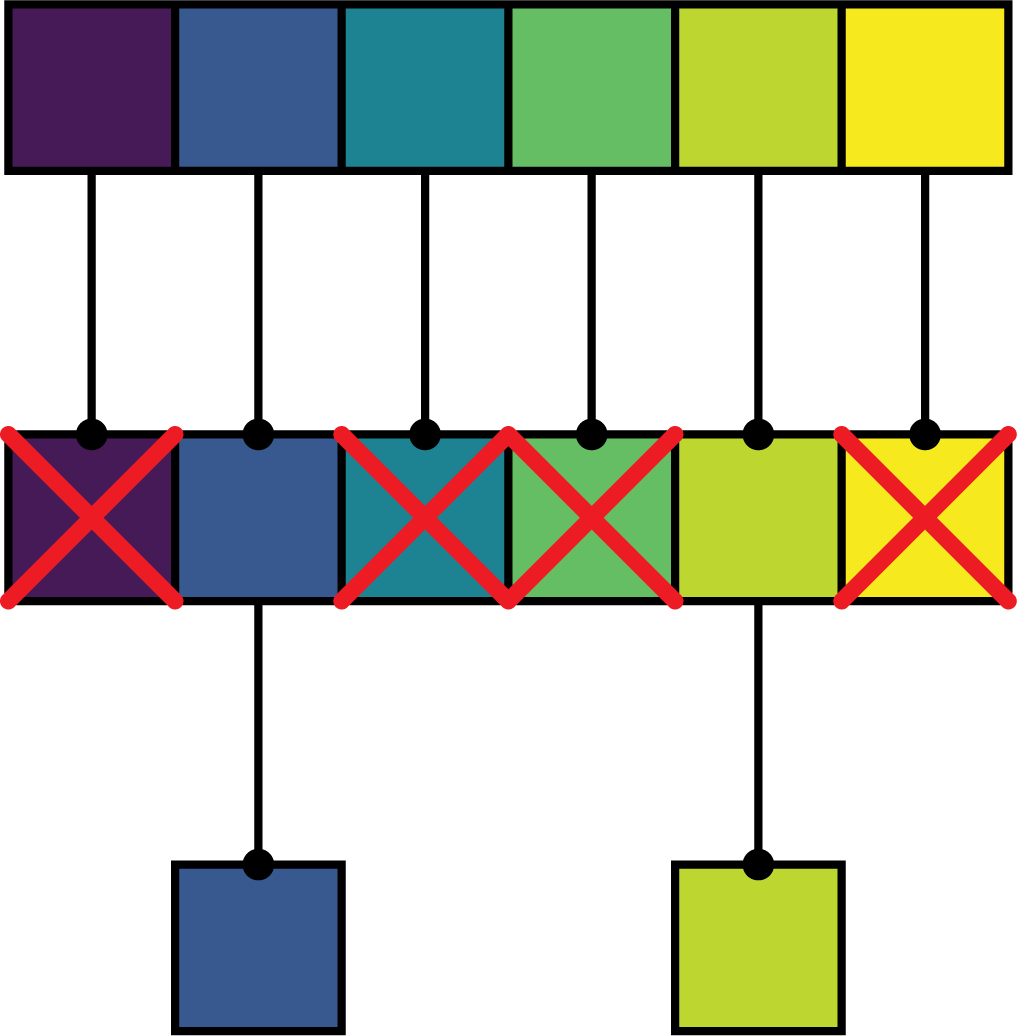

The primary difference between feature selection and feature extraction has to do with how the original variables in the data set are handled. With feature selection, all of the variables are initially considered, then - based on specific criteria - some variables are eliminated. The remaining variables may go through multiple additional rounds of selection, but once the process is complete, the selected variables remain unchanged from how they were presented in the original data.

Feature selection simply chooses the “most important” of the original variables, and discards the rest. Some classic feature selection techniques (notably stepwise, forward, or backward selection) are generally considered to be ill-advised, and Prism does not offer any form of automatic feature selection techniques at this time.

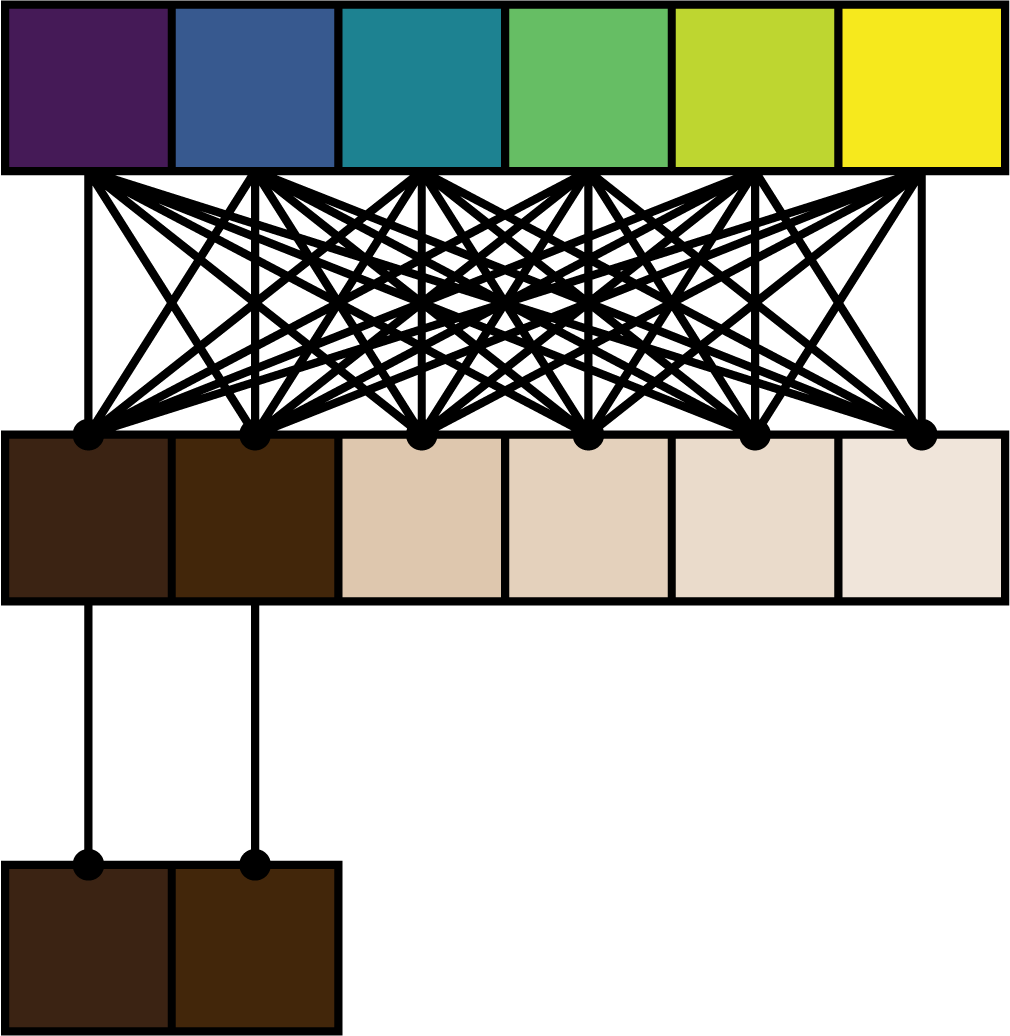

In contrast, feature extraction uses the original variables to construct a new set of variables (or features). Methods for deriving these new features from the original variables can either be linear or nonlinear. PCA is the most commonly used linear method used for feature extraction. With this approach, PCA uses linear combinations of the original variables to derive the new set of features (in PCA, we call these new features the principal components, or PCs).

To understand linear combinations, think about the following fruit punch recipe:

Fruit Punch

8 cups cranberry juice

3 cups pineapple juice

3 cups orange juice

¼ cup lemon juice

4 ¼ cup ginger ale

Another way to look at this is in the form of a linear combination of the ingredients (variables):

Fruit Punch = 8*(cranberry juice) + 3*(pineapple juice) + 3*(orange juice) + 0.25*(lemon juice) + 4.25*(ginger ale)

Each variable is multiplied by a constant (coefficient), and the products are added together. PCA performs a similar process by generating PCs that are linear combinations of the original variables. The really important part of PCA is the way that these PCs are defined, allowing for the original data to be projected into a lower dimensional space while minimizing the loss of information.

The two images below summarize the differences between feature selection (elimination of original variables) and feature extraction (formation of new variables as combinations of the original variables) followed by projection into a lower dimensional space using the new variables which contain the most useful information.